How Google Handles Outages

- Maybe a change, such as a config change, to the GFE (Google Front End) servers. Requests to Google services go through the Google front ends, which would explain why so many services went down and we couldn't access them.

- Maybe an authentication error. (This seems less likely, because Google still managed to work in incognito mode.)

Let me walk you through how Google manages outages and what caused the outage on December 14, 2020.

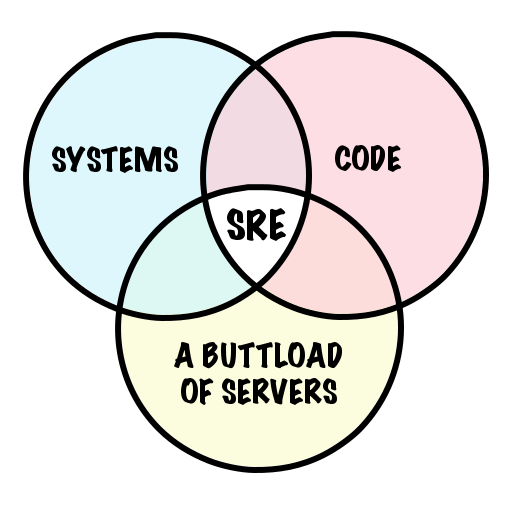

The whole process is managed by the SRE (Site Reliability Engineering) team at Google. The SRE team generally uses software as a tool to manage systems and to automate operations.

(As for who creates the monitors: SREs are software engineers first, and when they are not on-call, they are generally developing reliability for the products.)

Generally, most of the software engineers will be "on-call". Being "on-call" means being available at any time outside office hours. I don't know about other companies, but at Google, engineers who are on-call get paid for it.

Depending on the severity of the incident, an "outage" is declared. A call bridge is then opened, where experts and engineers jump on and focus on the number one priority: mitigating the incident. That essentially means they fix it, move it around, and do whatever they can (or have to) so that the customer is no longer experiencing the issue.
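To make the flow above a bit more concrete, here is a minimal sketch in Python of severity-based escalation. It is not Google's actual tooling: the `Incident` fields, the `affected_users_pct` metric, and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Incident:
    summary: str
    affected_users_pct: float  # hypothetical metric, 0 to 100


def classify(incident: Incident) -> Severity:
    """Map an incident to a severity using made-up thresholds."""
    if incident.affected_users_pct >= 50:
        return Severity.HIGH
    if incident.affected_users_pct >= 5:
        return Severity.MEDIUM
    return Severity.LOW


def respond(incident: Incident) -> List[str]:
    """Return the ordered response steps for an incident."""
    steps = [f"open ticket: {incident.summary}"]
    if classify(incident) is Severity.HIGH:
        # Only severe incidents are declared an outage and get a bridge.
        steps.append("declare outage")
        steps.append("open call bridge")
    # Mitigation comes first in every case; root cause comes later.
    steps.append("mitigate")
    return steps
```

Calling `respond(Incident("login failures", 80.0))` under these assumed thresholds yields a ticket, an outage declaration, a call bridge, and then mitigation, while a small incident skips straight from ticket to mitigation.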

Post-outage (or post-incident, meaning once it has been mitigated), the team holds a blameless postmortem. This consists of an RCA (Root Cause Analysis), where engineers investigate the cause of the incident/outage, then create repair items and devise a plan to prevent it from happening again.

All of these incidents, tickets, and bridges are constantly updated and documented for future reference. When things die down, some teams or organizations may decide to do a lessons-learned brown bag, a document, or some other medium for informing engineers about how this happened and how it can be prevented.

Note: Thanks to everyone, especially employees who shared some information with me.

Thanks for reading💓
